Since December 2022, ChatGPT has been the talk of the town. This artificial intelligence chatbot can answer questions and generate text with a fluency we've never really seen before. Between the fantasies, predictions and fears it has generated, there are plenty of possible uses, but also limits. Let's take a look at what ChatGPT really is.

On November 30, 2022, OpenAI released ChatGPT, an artificial intelligence chatbot capable of answering any question. The machine quickly went into overdrive: millions of people tested the tool, its possibilities, its limits and its pitfalls. The hype has continued, including at Frandroid. The result is confusion and, above all, untruths. To find out all you need to know about ChatGPT, look no further.

Our features on ChatGPT

We invite you to read our other features on ChatGPT to learn more about the chatbot:

. How do I use ChatGPT and what does it do?

. Who is OpenAI, the creator of ChatGPT and Dall-E?

. Google Bard vs Bing Chat vs ChatGPT: which is the best generative AI?

. Alternatives to ChatGPT: it's not just OpenAI's chatbot that exists.

What is ChatGPT?

ChatGPT was introduced by OpenAI as an artificial intelligence model, interacting "in a conversational manner", enabling this tool to "answer follow-up questions, admit mistakes, challenge incorrect premises and reject inappropriate requests."

The simple definition of a chatbot

ChatGPT is what we call a "conversational agent", otherwise known as a chatbot. A chatbot is a computer program with which you can communicate in real time. Until now, chatbots were essentially basic algorithms for which pre-made answers were programmed according to certain questions. This tedious work undeniably limited the performance of these agents.

But with artificial intelligence, the possibilities are more or less endless. AI chatbots can answer more questions, including longer and notably more complex ones.

How does ChatGPT work?

What if we told you that ChatGPT wasn't "intelligent" in the true sense of the word? That's what you might think if you take a very pragmatic look at what the tool does when it generates an answer. ChatGPT literally doesn't understand what you're saying or what it's writing.

In fact, ChatGPT is more of a prediction tool: its aim is to predict the next word in a sentence, based on all the words before it. These words are the instructions you give it and the sentences it has just written. It combines all this with what it learned from its training data, which contains gigantic quantities of text, and generates an answer by predicting, one after another, the words that best match the context. ChatGPT does nothing other than statistics and probabilities: in effect, it asks itself "what is the most likely word in this situation?" Before sending the answer, the AI evaluates it and can adjust it.
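To make the idea of "predicting the most likely next word" concrete, here is a deliberately tiny sketch in Python. It is not how GPT works internally (GPT uses a neural network and looks at the whole preceding context, not just the last word); the corpus, the function names and the one-word context are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it and how often."""
    follower_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(follower_counts, word):
    """Return the most frequent follower of `word` and its estimated probability."""
    followers = follower_counts.get(word.lower())
    if not followers:
        # The word was never seen with a follower: no prediction possible.
        return None, 0.0
    best_word, best_count = followers.most_common(1)[0]
    return best_word, best_count / sum(followers.values())


# Hypothetical three-sentence "training set", invented for this example.
toy_corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]

model = train_bigram_model(toy_corpus)
print(predict_next_word(model, "sat"))
print(predict_next_word(model, "the"))
```

In this toy corpus, "sat" is always followed by "on", while "the" is followed by "cat" in two cases out of six. A real language model does the same kind of thing at a vastly larger scale, with probabilities computed by a neural network rather than read from a table of counts.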

What is GPT, the language model behind ChatGPT?

Behind ChatGPT is what's known as a large language model (LLM). In a way, it's ChatGPT's "engine". In simple terms, a large language model is a neural network with a very large number of parameters (on the order of billions). These parameters encode syntax, vocabulary, punctuation and conjugation, and enable ChatGPT to write grammatically correct sentences.

These models are usually trained on large quantities of text. That training can be fully automated, supervised by humans, or a mix of both. Whereas artificial intelligence models are usually trained for a specific task, large language models are not specialized, and their uses can be diverse. The more they are trained, the better they perform. This requires computing power, which means high-performance servers and, undeniably, money.

At present, the free version of ChatGPT runs on the GPT-3.5 language model, which finished training in early 2022 and was trained on Microsoft servers.

How was ChatGPT trained?

The method used for training is called Reinforcement Learning from Human Feedback (RLHF). The GPT-3.5 model was trained in part by humans. They provided the AI with conversations in which they played both parts: the user and the chatbot. The responses were drafted in part by the AI, and the human trainers improved them to show the AI how to respond.

Using a reward system for reinforcement learning, OpenAI then collected generated responses ranked by humans and used those rankings to refine the model. This process was repeated over several iterations. On top of all this, OpenAI can use the conversations ChatGPT has with its users to improve the model, except for users who have chosen not to share their chat history.
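How can a ranking made by humans be turned into a training signal? One common formulation, used here as an assumption since the article does not describe OpenAI's actual code, is to split each ranking into pairs (preferred answer, rejected answer) and penalize a "reward model" whenever it scores the rejected answer higher. The function names and the scores below are hypothetical.

```python
import math


def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return [
        (ranked_responses[i], ranked_responses[j])
        for i in range(len(ranked_responses))
        for j in range(i + 1, len(ranked_responses))
    ]


def pairwise_preference_loss(reward_preferred, reward_rejected):
    """Compute -log(sigmoid(r_preferred - r_rejected)).

    The loss is small when the preferred answer gets the higher score
    and large when the reward model disagrees with the human ranking.
    """
    margin = reward_preferred - reward_rejected
    return math.log1p(math.exp(-margin))


# A human ranked three candidate answers from best to worst.
pairs = ranking_to_pairs(["answer A", "answer B", "answer C"])
print(pairs)

# Hypothetical reward-model scores for one pair.
print(pairwise_preference_loss(2.0, 0.0))  # model agrees with the human
print(pairwise_preference_loss(0.0, 2.0))  # model disagrees: higher loss
```

Minimizing this loss over many human rankings yields a reward model, which can then score new responses during the reinforcement learning phase.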

How smart is ChatGPT?

From a practical point of view, ChatGPT is nothing more than a text completion tool. But as Mr. Phi pointed out in an excellent instructional video, it's partly intelligent. To a certain extent, the chatbot understands what it's being told and can interpret it in its own way, even though it's completely unaware of what it is and what it's doing.

This question of ChatGPT's intelligence generalizes to the rest of artificial intelligence and raises the question: are AIs intelligent? The term "artificial intelligence" is partly a misnomer: if we use it, it's mainly because the "thinking" patterns of these computer systems are similar to those of our brains. That's why they're called "neural networks". Beyond AI, the question of its intelligence is necessarily linked to our definition of this concept.

What does ChatGPT mean?

ChatGPT is a combination of the terms "Chat" and "GPT". The former refers to conversation, while the latter stands for "Generative Pre-trained Transformer", the name of the family of large language models we mentioned earlier.

GPT is not a brand specific to OpenAI, since the term refers to a certain type of language model: those trained with a lot of text and used primarily to generate text.

How many people use ChatGPT worldwide?

Everyone has heard of ChatGPT, but that doesn't mean the chatbot is used by everyone. According to Similarweb, a website analytics tool, traffic to OpenAI's creation grew by 132% in January and has been slowing down since then: growth in May is said to have been just 2.8% compared to April. Even so, ChatGPT accounted for 1.8 billion visits worldwide in May, which is huge for a site launched in November.

It is difficult to obtain statistics on the number of individual users, as OpenAI does not communicate on this subject. According to Similarweb, over 206 million unique users logged on to ChatGPT in April. All this would make ChatGPT the seventeenth most visited website in the world in May 2023, about 300 million visits away from TikTok, for example.

ChatGPT's limitations

Despite ChatGPT's ability to generate human-like texts, the chatbot has serious limitations that prevent it from being deployed on a larger scale.

Does ChatGPT have Internet access?

ChatGPT was trained on a huge dataset, including a large part of the Internet, that ends in September 2021. It has no knowledge of what happened afterwards. The most basic examples are when someone asks it about the war in Ukraine or the results of the last French presidential election. ChatGPT has no knowledge of these events, and therefore can't comment on them.

Can ChatGPT make things up that aren't true?

While most of the time ChatGPT writes plausible answers, they can quickly become factually incorrect. Worse still, the chatbot can sometimes "hallucinate" and talk nonsense. This is the case when it is asked for books on a particular topic: the authors it cites may be real, but they never wrote the books the AI attributes to them.

You can also find this out by asking it to perform arithmetic operations: very often, the chatbot gets it horribly wrong, worse than a dunce. In reality, this is perfectly normal: it's a language model, designed for linguistics, not mathematics.

How good is this chatbot?

If you've tested ChatGPT, you'll have to admit that, at first glance, the tool is pretty impressive, and we've never seen anything like it. But a closer look reveals a number of limitations. First of all, the lexical field: if you don't give any indication of tone or vocabulary, ChatGPT lacks personality and is smooth as can be. The chatbot can also overuse certain unoriginal sentence constructions. It also tends to remind us that it's an artificial intelligence chatbot created by OpenAI.

ChatGPT Plus: how does it work?

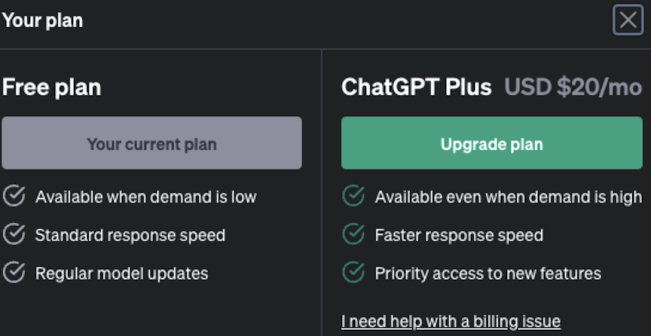

ChatGPT's paid subscription package is called ChatGPT Plus. OpenAI is keen to reassure anyone who will listen that ChatGPT will remain free. The subscription is currently priced at $24 per month in France, taxes included (the advertised price is $20 before tax), or around €22.50 at current exchange rates.

What's the difference between the free version of ChatGPT and ChatGPT Plus?

The subscription allows access to the chatbot even when demand is high and the risk of server saturation is present. However, while this may have been useful a few months ago, ChatGPT is now available continuously. For those in a hurry, the Plus subscription offers faster response times.

OpenAI adds that ChatGPT Plus provides early access to new features. This is now the case, as you can use GPT-4, the latest version of the language model developed by OpenAI. In most cases, however, GPT-4 and ChatGPT Plus in general are not necessary, and the free version is more than adequate.

How to use GPT-4

The latest version of OpenAI's large language model, GPT-4, is reserved exclusively for ChatGPT Plus subscribers. Once you've subscribed, a selector in the ChatGPT menu lets you choose between the two language models deployed.

The only disadvantage of GPT-4 compared with GPT-3.5 is that it is slower: for the simplest tasks, therefore, prefer GPT-3.5.

What's the difference between GPT-4 and GPT-3.5?

When it comes to computer programming, for example, GPT-4 makes fewer programming errors and is generally more efficient in this area, as its knowledge and skills are more extensive. This is because it is better "for tasks requiring creativity and advanced reasoning", according to its designer.

In fact, in most cases, the difference between the two versions is not necessarily perceptible. On top of all this, GPT-4 can describe images in great detail. But even with all these changes, knowledge is still limited to September 2021.

Plugins: how to harness the full power of the chatbot

The problem with ChatGPT is that the chatbot is largely confined to its own website and mobile app. It would be nice to be able to connect it to other services. Generative AI is increasingly being integrated into our office suites: this is the case with Microsoft's Copilot and Google's various Workspace tools.

Fortunately, there is currently a way to connect ChatGPT with other tools, via plugins, available with ChatGPT Plus only. This feature, still in beta, allows you to connect your AI to the Internet, for example, to get answers from search results. Others allow you to interpret computer code or plan your travels.

The dangers of ChatGPT

Even with its major limitations, ChatGPT is a source of concern in many areas. Its dangers are manifold, from cheating on coursework to hacking.

The use of generative AI by pupils and students

The automatic generation of text in a few seconds from a single question is an attractive idea for many pupils and students, who are mainly asked to write, although this is not the case in all subjects. But ChatGPT can also be used for presentations and homework. The first case of cheating in France was detected at the Université Lyon 1: half of a class used the chatbot to write their homework.

While academic and legal uncertainty is the order of the day, the pedagogical value of the practice can undeniably be called into question. And because the generated texts are unique, they are not detected by the anti-plagiarism tools used by universities. On the other hand, in some cases ChatGPT is not good enough for the level expected. Moreover, as mentioned above, its sentence constructions and vocabulary are fairly simplistic, which can make its use easy to spot.

What ChatGPT could change in teaching is what learners are asked to do: more thought could be given to discouraging the use of ChatGPT, or at least reducing it. Among the options under consideration are the creation of a digital trace in texts generated by ChatGPT, or a history that would enable reverse search. What is certain is that during a real test, the majority of pupils and students don't have a computer at hand, and if they do, it's without the Internet.

Pollution of Internet content

Most website traffic comes from search engines, especially Google. Appearing in search results is therefore the key to generating more traffic. The methods used to achieve this are known as SEO, for Search Engine Optimization. One of the most effective practices is to publish lots of content. The problem is, it's expensive: you have to hire editors and journalists.

Until a few months ago, some sites with little regard for the quality of their content had found a way to do this: take content from another language and translate it. Indeed, tools such as DeepL or Google Translate are very good at this. But today, ChatGPT has gone one step further.

The Internet is filling up more and more with automatically generated content, obviously generated by ChatGPT. What's more, some sites are powered solely by articles written by AIs. In the long term, it could even be a case of the snake biting its own tail: AIs base themselves on content published on the Internet and draw their wealth from it. But if the content from which they draw their inspiration has been generated, they could find themselves impoverished.

On the other hand, some media players are stepping up their use of AI, to the detriment of real journalists. This is the case at Cnet (not to be confused with our colleagues at Cnet France): the North American section is said to have laid off 50% of its staff dedicated to news writing, reported L'ADN last March.

Bad advice for young people

A controversy arose in March concerning ChatGPT, or rather its language model. GPT-3.5 was integrated into Snapchat via a bot called My AI. It appears in the application like any other contact, and users can chat with it freely. However, in its early days, it gave disturbing advice to younger users.

A user pretended to be a thirteen-year-old girl, and My AI didn't find it problematic that she left with a thirty-year-old adult in another country and even had sex with him.

Helping to create computer viruses

The chatbot makes it easy to create and even correct programs. Undeniably, it can be used for dishonest purposes, such as writing a malicious script. That's what one researcher managed to do: create malware using ChatGPT.

Of course, OpenAI has put restrictions in place to prevent such use, but these have been circumvented. The researcher in question got around them by having the chatbot write the code as separate lines.

Phishing made more automated

You're bound to have received phishing e-mails. These are messages posing as institutions or companies: the Post Office, the tax authorities, Netflix, Amazon, Colissimo and so on. Each time, they ask for personal information: identity documents, bank card codes, etc.

One simple way of identifying them is to check spelling, syntax and phrasing. It's often easy to spot mistakes and avoid falling for them. But ChatGPT could help scammers write these fake e-mails, making them look official, personalizing them, etc. On this subject, OpenAI doesn't seem to set any limits, since it's perfectly possible to generate this type of message.

Can ChatGPT replace us?

This is another big question on everyone's lips: can ChatGPT replace jobs? While this may be the case in journalism and web editing, what about other professions? OpenAI itself asked the question in a scientific study published last March.

The study reveals "that around 80% of the US workforce could see at least 10% of their tasks affected by the introduction of LLMs, while around 19% of workers could see at least 50% of their tasks affected." In total, "about 15% of all workers' tasks in the U.S. could be completed much faster at the same level of quality." The scientists who conducted this study do not, however, put forward a timetable for the adoption of text-generating AI. Still, they predict that these language models "could have considerable economic, social and political implications."

As for the investment bank Goldman Sachs, it believes that "the labor market could be severely disrupted."

While maintenance workers, factory workers and craftsmen won't be affected, AIs like ChatGPT could have an impact on more computerized professions, such as accounting, administration and communications. That impact would be on productivity: like computing, the Internet and specialized software before it, ChatGPT could boost productivity in a number of professions.

Technically, ChatGPT could replace a number of professions. But the question of replacing humans is a different one: should we eliminate jobs, some of which are no longer needed, or reduce the working time of each person in view of the productivity gains? This is more of a societal issue than a technical one.

What is the carbon footprint of a ChatGPT question?

Calculating the carbon footprint of a digital service is extremely complicated: there are many factors involved in greenhouse gas emissions, and these are not necessarily easy to measure. Nevertheless, some estimates can be made.

In a study published last April, carbon accounting firm Greenly put forward an estimate of ChatGPT's carbon footprint, based on GPT-3. To do this, Greenly took "as an example the case of a company using it to automatically reply to 1 million e-mails per month for one year", i.e. 12 million e-mails. It concluded that ChatGPT would have "emitted 240 tonnes of CO2e (CO2 equivalent, a measure of greenhouse gas emissions), the equivalent of 136 return trips between Paris and New York."
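To put Greenly's figures in more tangible terms, the short calculation below divides the total by the number of e-mails and by the number of flights. It uses only the numbers quoted above; the variable names are ours, and the per-e-mail and per-trip values are simple averages derived from those numbers, not results published by Greenly.

```python
# Figures quoted from the Greenly scenario.
TOTAL_EMISSIONS_TONNES = 240   # tonnes of CO2e over one year
EMAILS_PER_MONTH = 1_000_000
MONTHS = 12
RETURN_TRIPS = 136             # Paris-New York return trips

GRAMS_PER_TONNE = 1_000_000

total_emails = EMAILS_PER_MONTH * MONTHS
grams_per_email = TOTAL_EMISSIONS_TONNES * GRAMS_PER_TONNE / total_emails
tonnes_per_return_trip = TOTAL_EMISSIONS_TONNES / RETURN_TRIPS

print(f"E-mails handled: {total_emails:,}")
print(f"Average footprint per e-mail: {grams_per_email:.0f} g CO2e")
print(f"Implied footprint per return trip: {tonnes_per_return_trip:.2f} t CO2e")
```

In other words, the scenario works out to an average of about 20 g of CO2e per automated reply, and the comparison implies roughly 1.76 tonnes of CO2e per return trip.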

The biggest contributor is, of course, the electricity used to run the servers on which the chatbot runs. In the case studied by Greenly, this would represent around 75% of the total carbon footprint. One way to amortize (not reduce) ChatGPT's carbon impact is... to use it as much as you can. In fact, training accounts for 99% of carbon emissions: the more the tool is used, the smaller the share of that fixed cost attributable to each use.

Exploiting workers for labelling purposes

ChatGPT also raises questions about the way in which the chatbot was developed. Last January, a Time investigation implicated OpenAI, or rather one of its subcontractors, for exploiting workers. Kenyans were paid less than two dollars an hour to make the AI less toxic.

In fact, these workers were labeling texts to determine whether they should be used to train the language model. The problem was that the texts in question could describe atrocious violence, and some employees developed psychological disorders without receiving any follow-up care. All this while being paid far less than promised. OpenAI shifted the blame to its subcontractor following publication of the investigation.

The (financial) valuation of the work of those who train artificial intelligence models such as GPT is becoming an increasingly important issue. Firstly, because artificial intelligence represents an ever-increasing financial windfall. Secondly, because training is an essential step in the creation of AI. However, a recent study has shown that freelance workers, most of whom are underpaid, can use AI to carry out micro-training tasks.

Could artificial intelligence lead to a shortage of graphics cards?

To run ChatGPT and, more generally, all other generative artificial intelligences, you need computing power, and in particular GPU power, supplied by graphics cards. After numerous phases of shortages linked to cryptocurrency mining, but also component shortages and factory blockades due to health restrictions, the market was tending to rebalance. But according to some studies, generative AI could lead to yet another graphics card shortage.

For example, training and maintaining ChatGPT would have required no fewer than 10,000 Nvidia graphics cards. If OpenAI were the only one doing this, there would be no worries and no risk of not finding the card you want. On the other hand, if the models and tools tend to multiply, as is the case right now, sourcing could be more complex. And yet, there's a big winner behind this industry: Nvidia, the historic graphics card manufacturer and a very powerful company.

Can AI-generated text be detected?

At present, there is no very reliable way of detecting whether a text has been generated with an AI like ChatGPT or not. There are, however, a few telltale signs that can help identify generated texts:

. A poor lexical field

. Very smooth speech

. Repetitive sentence forms

Dedicated tools do exist. One of these was made available by OpenAI, following strong criticism that it was impossible to check whether a text had been generated by ChatGPT: AI Text Classifier, which you can try out for yourself. This tool requires a minimum of 1,000 characters for analysis. OpenAI acknowledges that this "classifier" is not always reliable, and that text generated by AI can be modified to escape its analysis. At the time of the tool's launch, OpenAI wrote that the "classifier correctly identifies 26% of AI-written text (true positives) as 'likely AI-written', while incorrectly labeling human-written text as AI-written in 9% of cases (false positives)." Finally, it is less reliable on text written by children or in languages other than English.
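What do the 26% and 9% figures mean for someone who receives a flagged text? That depends on how many of the texts being checked were actually written by an AI, which nobody knows in advance. The sketch below applies Bayes' rule to OpenAI's two published rates; the function name and the two proportions tested (50% and 10% of AI-written texts) are assumptions chosen purely for illustration.

```python
def share_of_flags_that_are_correct(true_positive_rate, false_positive_rate, ai_share):
    """Among texts flagged as AI-written, return the fraction that really are.

    ai_share is the assumed proportion of AI-written texts in the pile
    being checked (between 0 and 1, and not 0 when both rates are 0).
    """
    flagged_ai = true_positive_rate * ai_share
    flagged_human = false_positive_rate * (1 - ai_share)
    return flagged_ai / (flagged_ai + flagged_human)


TRUE_POSITIVE_RATE = 0.26   # published by OpenAI
FALSE_POSITIVE_RATE = 0.09  # published by OpenAI

for assumed_ai_share in (0.5, 0.1):  # hypothetical scenarios
    precision = share_of_flags_that_are_correct(
        TRUE_POSITIVE_RATE, FALSE_POSITIVE_RATE, assumed_ai_share
    )
    print(f"AI share {assumed_ai_share:.0%}: {precision:.0%} of flags are correct")
```

If half of the texts are AI-written, about three flags out of four are right. If only one text in ten is AI-written, fewer than one flag in four is, which is why a teacher checking a mostly honest class should treat a positive result with caution.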

In practice, the AI Text Classifier doesn't directly say whether a particular text was generated by an AI: each document is labeled as "very unlikely, unlikely, unclear if it is, possibly, or likely AI-generated." Indeed, in our tests, the tool sometimes gets it wrong.

Should ChatGPT and all other language models be put into everyone's hands?

In March, the weights of LLaMA, Meta's language model, were leaked. Anyone can make it work, provided they have access to the model files and a sufficiently powerful machine. While it's a good thing that anyone can use a language model without depending on a private company, it also poses a number of potential dangers.

As mentioned above, ChatGPT and language models can be used for dishonest purposes. However, they are limited by the fact that the companies that publish them develop solutions to control the use of their creation and moderate what LLMs write.

However, it's not reassuring that only large digital companies control these AIs: we're talking about Google, Microsoft, and now Meta. What's more, we know very little about what's inside these black boxes, or how they work. Finally, it's worth pointing out that running these models is very expensive in terms of computing resources and therefore financial resources: not everyone can use all the power of LLMs themselves.

The future of ChatGPT

Now that we've covered everything that makes ChatGPT the most powerful chatbot, let's take a look at what the tool will look like in the future.

A "Business" subscription for professionals

On one of ChatGPT's FAQ pages, OpenAI says it's working "on a new ChatGPT Business subscription for professionals who need more control over their data, as well as for businesses looking to manage their end users."

The aim will be to offer a package in which user data is not used by default to train GPT. No roll-out date has been announced, other than that it will happen "in the coming months".

Integrating generative AI into video games

Several other future uses are being tested for ChatGPT. One of these is the use of language models in video games, to make characters talk.

For example, a mod for the game Mount and Blade II: Bannerlord has been developed, based on ChatGPT, to enable natural chat with characters. Some limitations remain, including response times, which break the immersion in the game.

When will GPT-5 be released?

After GPT-4 comes GPT-5. However, this doesn't mean that the next version of the language model behind ChatGPT is due out any time soon. OpenAI CEO Sam Altman has repeatedly stated that his company is not actively developing GPT-5. He acknowledges that his company has some ideas, but nothing concrete.

Sam Altman would like to raise safety requirements, and has emphasized the need for independent auditing and testing. OpenAI could also opt for an intermediate version of its LLM, with GPT-4.5, as was the case with GPT-3.5. This is a strategic position for the company: it would be tricky for OpenAI to rush ahead with GPT-5 at a time when criticism of generative AI is mounting, particularly from a safety point of view. On top of all this, legislation is evolving and could have an impact on ChatGPT and its development.

What regulations are in place for ChatGPT?

ChatGPT and, more generally, all language models and generative artificial intelligences are relatively recent. As a result, their use and development are not necessarily sufficiently regulated. That's why legislation around the world is evolving to take these new tools into account. We know that the American FTC and the British CMA, two competition authorities, are currently studying the potential consequences of ChatGPT.

Will the revolution in voice assistants involve large-scale language models?

What ChatGPT could revolutionize is voice assistants. In our community of readers, a quarter of you use a voice assistant on a daily basis, and around half never use one. The utopia of an intelligent personal assistant sold a few years ago is no more: today, Siri updates are scarce, Google Assistant hasn't changed in a long time, Alexa is reportedly mocked internally and Bixby is in a Schrödinger's box. The line is somewhat extreme, but the point is that voice assistants are still far from fulfilling their promise.

Yet ChatGPT could revolutionize them forever. Although the information it gives is not necessarily reliable, it always manages to give an answer, and its capabilities are impressive, all quite quickly. Coupled with an "Internet connection", it could well be integrated into voice assistants to generate answers. Demonstrations are already underway: the ChatGPT app on iOS enables voice command via Siri. This enables the chatbot to be invoked by voice alone, while receiving a voice response. German automaker Mercedes is also testing the integration of ChatGPT in its vehicles for its voice assistant.
